The Alimama Creative Team, which operates under Alibaba, has released Alpha and Beta inpainting ControlNet models for FLUX.1-dev. The model weights fall under the FLUX.1 [dev] Non-Commercial License.

The Alpha model is trained on 12 million images from the LAION-2B dataset and other internal sources, at resolutions of 768x768 and 1024x1024. These are the optimal sizes for inference; other resolutions can lead to subpar results.

The Beta model, by contrast, is trained on a 15-million-image dataset at 1024x1024 resolution and can generate modifications with more detailed precision than the Alpha model.

According to the official report, the models improve inpainting results and have an advantage over the older SDXL inpainting models.
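Because results degrade away from the training resolutions, it can help to rescale your input before masking it. The helper below is a minimal sketch and is not part of Alimama's release. It assumes you want the longer side at 1024 (or 768) pixels, and that both sides should be multiples of 16. That second constraint is a common choice for FLUX latents, but verify it for your own setup.

```python
def fit_to_training_size(width: int, height: int, target: int = 1024,
                         multiple: int = 16) -> tuple[int, int]:
    """Scale (width, height) so the longer side is about `target` pixels,
    keeping the aspect ratio, then round each side to a multiple of `multiple`.

    ASSUMPTION: target=1024 or 768 (the training sizes) and multiple=16 are
    illustrative defaults, not values taken from Alimama's documentation.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    scale = target / max(width, height)

    def snap(side: int) -> int:
        # Round the scaled side to the nearest multiple, never below one multiple.
        return max(multiple, round(side * scale / multiple) * multiple)

    return snap(width), snap(height)


print(fit_to_training_size(2048, 1536))             # (1024, 768)
print(fit_to_training_size(800, 600, target=768))   # (768, 576)
```

You would then resize the image to the returned size in any image editor or node before loading it.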

Installation:

1. First, install ComfyUI. If you have not already, also follow the basic Flux installation workflow.

2. In the ComfyUI Manager, click "Custom Nodes Manager", then install "ComfyUI Essentials" by Cubiq.

3. Next, download the checkpoints:

(a) The Flux inpainting Alpha model weights (safetensors) from Alimama Creative's Hugging Face repository.

(b) Turbo Alpha, an 8-step distilled LoRA, which can also be downloaded from Alimama Creative's Hugging Face repository. This checkpoint can be used in both text-to-image and inpainting workflows.

(c) The newer Flux inpainting Beta model, which can be downloaded from a separate Alimama Creative Hugging Face repository.

After downloading, save the Alpha and Beta ControlNet checkpoints inside the "ComfyUI/models/controlnet" folder. Turbo Alpha is a LoRA, so it belongs in "ComfyUI/models/loras" instead. To keep the workflow well organized, you can rename the files to something descriptive, such as "Alimama-Flux-controlnet-inpainting-alpha.safetensors", "Alimama-Flux-turbo-alpha.safetensors", and "Alimama-Flux-controlnet-inpainting-beta.safetensors".
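If you prefer to script this step, the sketch below moves downloaded files into place using only Python's standard library. The download file names in `LAYOUT` are hypothetical placeholders; the target names follow the renaming suggestion above. Because Turbo Alpha is an 8-step distilled LoRA, the sketch assumes it goes in `ComfyUI/models/loras`, the folder ComfyUI scans for LoRA files.

```python
import shutil
import tempfile
from pathlib import Path

# HYPOTHETICAL source names: replace them with the names your downloads actually have.
# Each entry maps a downloaded file to (ComfyUI models subfolder, new file name).
LAYOUT = {
    "alpha_download.safetensors": (
        "controlnet", "Alimama-Flux-controlnet-inpainting-alpha.safetensors"),
    "beta_download.safetensors": (
        "controlnet", "Alimama-Flux-controlnet-inpainting-beta.safetensors"),
    # Turbo Alpha is a LoRA, so it goes to models/loras rather than models/controlnet.
    "turbo_download.safetensors": (
        "loras", "Alimama-Flux-turbo-alpha.safetensors"),
}


def install_checkpoints(download_dir: Path, comfy_root: Path,
                        layout: dict = LAYOUT) -> list[Path]:
    """Move each downloaded file to ComfyUI/models/<subfolder>/<new name>."""
    installed = []
    for src_name, (subfolder, new_name) in layout.items():
        src = download_dir / src_name
        if not src.exists():
            continue  # not downloaded yet; skip it
        dest_dir = comfy_root / "models" / subfolder
        dest_dir.mkdir(parents=True, exist_ok=True)
        dest = dest_dir / new_name
        shutil.move(str(src), str(dest))
        installed.append(dest)
    return installed


if __name__ == "__main__":
    # Dry run in a throwaway directory so nothing real is moved.
    with tempfile.TemporaryDirectory() as tmp:
        downloads = Path(tmp) / "downloads"
        downloads.mkdir()
        (downloads / "alpha_download.safetensors").write_bytes(b"stub")
        for path in install_checkpoints(downloads, Path(tmp) / "ComfyUI"):
            print(path.relative_to(tmp))
```

For real use, call `install_checkpoints(Path("~/Downloads").expanduser(), Path("ComfyUI"))` with your own paths. Files that are missing are skipped rather than treated as errors, so you can rerun it after each download.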

Workflow:

1. Download the ControlNet Alpha, ControlNet Alpha Turbo, and ControlNet Beta workflows from Alimama Creative's Hugging Face. They build on the native Flux Dev workflow. If you are not familiar with the basic workflow, go through our tutorial on Flux workflows first.

2. Drag and drop the workflow into ComfyUI. If you see red error nodes, open the Manager and click "Install missing custom nodes". Then restart and refresh ComfyUI for the changes to take effect.
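Before dragging a downloaded workflow into ComfyUI, you can list the node types it uses. This makes it easier to spot which ones come from custom node packs. The following is a minimal sketch that assumes the JSON is in one of two formats:

- ComfyUI's UI export format, with a top-level `"nodes"` list whose entries carry a `"type"`.
- Its API format, with node ids mapping to objects that have a `"class_type"`.

The node names in the demo are illustrative.

```python
import json
from collections import Counter


def node_types(workflow: dict) -> Counter:
    """Count how often each node type appears in a ComfyUI workflow.

    ASSUMPTION: UI exports look like {"nodes": [{"type": ...}, ...]};
    API exports look like {"<id>": {"class_type": ..., "inputs": {...}}, ...}.
    """
    nodes = workflow.get("nodes")
    if isinstance(nodes, list):  # UI export format
        return Counter(node.get("type", "<unknown>") for node in nodes)
    return Counter(  # API format
        node["class_type"]
        for node in workflow.values()
        if isinstance(node, dict) and "class_type" in node
    )


def load_workflow(path: str) -> dict:
    """Read a workflow JSON file from disk."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


if __name__ == "__main__":
    demo = {"nodes": [{"id": 1, "type": "UNETLoader"},
                      {"id": 2, "type": "ControlNetLoader"},
                      {"id": 3, "type": "CLIPTextEncode"},
                      {"id": 4, "type": "CLIPTextEncode"}]}
    for name, count in sorted(node_types(demo).items()):
        print(f"{count} x {name}")
```

To use it, pass `load_workflow("path/to/workflow.json")` to `node_types`. Any type you do not recognize as a core ComfyUI node is a candidate for "Install missing custom nodes".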

3. In the "Load Diffusion Model" node, choose "Flux.1 Dev" as the model weight. In the "Load ControlNet Model" node, load the ControlNet inpainting model you downloaded.

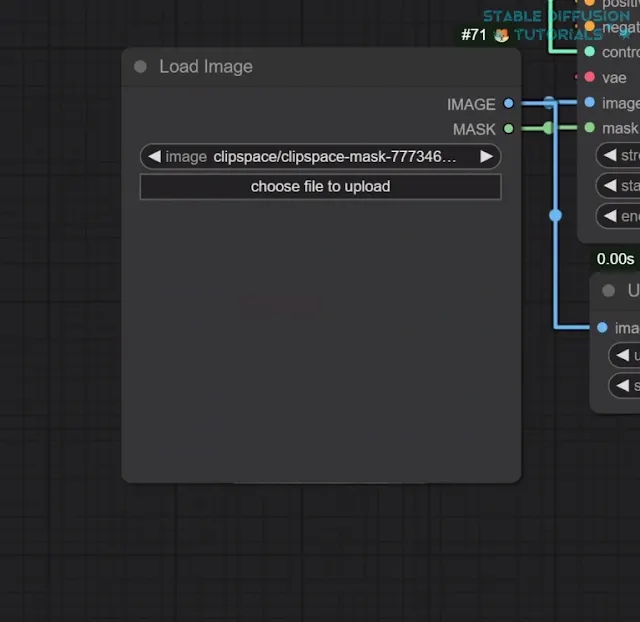
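If you reuse the same workflow file often, a small script can point its loader nodes at your renamed checkpoints, so you do not have to click through the dropdowns each time. This is a sketch under three assumptions:

- The file is in the UI export format.
- The "Load Diffusion Model" and "Load ControlNet Model" nodes are stored with the types `UNETLoader` and `ControlNetLoader`.
- The file name is the first entry of each node's `"widgets_values"`.

Check these against your own exported workflow before relying on it. The file names in the usage note are placeholders.

```python
import json


def set_model_file(workflow: dict, node_type: str, filename: str) -> int:
    """Set the file name on every node of `node_type`; return how many changed.

    ASSUMPTION: in the UI export format, the model file name is the first
    element of the node's "widgets_values" list.
    """
    changed = 0
    for node in workflow.get("nodes", []):
        if node.get("type") == node_type and node.get("widgets_values"):
            node["widgets_values"][0] = filename
            changed += 1
    return changed


def patch_workflow(src: str, dst: str, unet_file: str, controlnet_file: str) -> None:
    """Load a workflow JSON, swap in the model files, and write a patched copy."""
    with open(src, encoding="utf-8") as f:
        workflow = json.load(f)
    if set_model_file(workflow, "UNETLoader", unet_file) == 0:
        print("warning: no UNETLoader node found")
    if set_model_file(workflow, "ControlNetLoader", controlnet_file) == 0:
        print("warning: no ControlNetLoader node found")
    with open(dst, "w", encoding="utf-8") as f:
        json.dump(workflow, f, indent=2)
```

A typical call might look like `patch_workflow("beta_workflow.json", "beta_workflow_local.json", "flux1-dev.safetensors", "Alimama-Flux-controlnet-inpainting-beta.safetensors")`, where every name is hypothetical. The original file is left untouched, so you can always fall back to it.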

4. Load your target image in the "Load Image" node. Right-click the image and select "Open in MaskEditor". Mask the area you want to change, then click "Save to node".
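Conceptually, the mask you paint is a per-pixel map the same size as the image. It holds 1 where the model should repaint and 0 where the original pixels should be kept. The pure-Python sketch below assumes that 0/1 convention and uses hypothetical helper names. It builds a rectangular mask, then reports how much of the image the mask covers and its bounding box. This is a quick way to sanity-check that you masked only the region you meant to change.

```python
Mask = list[list[int]]


def rect_mask(width: int, height: int, box: tuple[int, int, int, int]) -> Mask:
    """Return a height x width mask with 1 inside box=(left, top, right, bottom).

    right and bottom are exclusive, like Python slices. 1 = repaint, 0 = keep.
    """
    left, top, right, bottom = box
    return [[1 if left <= x < right and top <= y < bottom else 0
             for x in range(width)]
            for y in range(height)]


def coverage(mask: Mask) -> float:
    """Fraction of pixels marked for inpainting."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total


def bounding_box(mask: Mask):
    """Smallest (left, top, right, bottom) box containing every 1, or None."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, value in enumerate(row) if value]
    if not ys:
        return None
    return min(xs), min(ys), max(xs) + 1, max(ys) + 1


mask = rect_mask(8, 4, (2, 1, 6, 3))
print(coverage(mask))       # 0.25
print(bounding_box(mask))   # (2, 1, 6, 3)
```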

5. Add a descriptive positive prompt in the "CLIP Text Encode" node.

6. Configure the settings:

Using the "t5xxl-FP16" and "flux1-dev-fp8" models for 28-step inference requires significant GPU memory (around 27GB). Keep the following points in mind:

Recommended settings for the Alpha, Turbo Alpha, and Beta models:

Inference time: at 1024x1024 resolution, CFG=3.5 gives an inference time of about 27 seconds, while CFG=1 reduces it to approximately 15 seconds.

Acceleration: you can also use the Hyper-FLUX LoRA to speed up inference.
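The gap between the two CFG timings fits a simple explanation: a CFG value above 1 may cost one extra model pass per step, for the negative or unconditional prompt. ComfyUI's sampler can skip that pass when CFG is 1. The sketch below fits a back-of-the-envelope linear model to the two reported figures. The one-extra-pass and fixed-overhead structure is an assumption rather than a measured fact, and the fitted numbers are only as good as the two timings behind them. Measure on your own GPU.

```python
def fit_timing(t_cfg: float, t_no_cfg: float, steps: int) -> tuple[float, float]:
    """Fit total = overhead + steps * passes * per_pass to two measurements.

    ASSUMPTION: 2 model passes per step when CFG > 1, 1 pass when CFG == 1,
    and a fixed overhead (text encoding, VAE decode, ...) shared by both runs.
    """
    per_pass = (t_cfg - t_no_cfg) / steps   # the CFG run does one extra pass per step
    overhead = t_no_cfg - steps * per_pass  # whatever the passes do not explain
    return overhead, per_pass


def estimate_seconds(steps: int, cfg: float, overhead: float, per_pass: float) -> float:
    """Predict total time for another step count under the same linear model."""
    passes = 1 if cfg == 1 else 2
    return overhead + steps * passes * per_pass


# Reported figures: ~27 s at CFG=3.5 and ~15 s at CFG=1, 28 steps, 1024x1024.
overhead, per_pass = fit_timing(27.0, 15.0, 28)
print(f"overhead ~ {overhead:.1f} s, per pass ~ {per_pass:.2f} s")
# overhead ~ 3.0 s, per pass ~ 0.43 s
```

Under this model, most of the time goes to the per-step passes. This is why both lowering CFG to 1 and reducing the step count (for example with an acceleration LoRA) pay off.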

Parameter adjustments: the recommended parameters for each model are listed below.

| Parameter | Alpha | Beta | Alpha Turbo |
|---|---|---|---|
| control-strength | 0.9 | 0.6-1.0 | |
| control-end-percent | 1.0 | 0.5-1.0 | |
| CFG | 3.5 | 1.0 or 3.5 | 3.5 |

For best performance, set the "controlnet_conditioning_scale" between 0.9 and 0.95.
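To avoid mistyping values when switching between models, you can keep the recommendations as data and check your settings against them. In this sketch, the values are transcribed from the table. Applying the 0.9-0.95 conditioning-scale range to the Alpha strength is an interpretation, and empty table cells become `None`, meaning "no recommendation". `Preset` and `check_settings` are hypothetical names, not part of any ComfyUI or Alimama API.

```python
from dataclasses import dataclass
from typing import Optional

Range = tuple[float, float]


@dataclass(frozen=True)
class Preset:
    """Recommended values; None means the table gives no recommendation."""
    strength: Optional[Range]      # ControlNet strength (min, max)
    end_percent: Optional[Range]   # ControlNet end percent (min, max)
    cfg_values: tuple[float, ...]  # allowed CFG values


# Transcribed from the table; Alpha strength uses the 0.9-0.95 range (an interpretation).
PRESETS = {
    "alpha": Preset(strength=(0.9, 0.95), end_percent=(1.0, 1.0), cfg_values=(3.5,)),
    "beta": Preset(strength=(0.6, 1.0), end_percent=(0.5, 1.0), cfg_values=(1.0, 3.5)),
    "alpha-turbo": Preset(strength=None, end_percent=None, cfg_values=(3.5,)),
}


def check_settings(model: str, strength: float, end_percent: float, cfg: float) -> list[str]:
    """Return warnings for settings outside the recommended values."""
    preset = PRESETS[model]
    warnings = []
    for name, value, allowed in (("strength", strength, preset.strength),
                                 ("end_percent", end_percent, preset.end_percent)):
        if allowed is not None and not allowed[0] <= value <= allowed[1]:
            warnings.append(f"{name}={value} outside recommended {allowed[0]}-{allowed[1]}")
    if cfg not in preset.cfg_values:
        warnings.append(f"cfg={cfg} not in recommended {preset.cfg_values}")
    return warnings


print(check_settings("beta", strength=0.8, end_percent=0.7, cfg=1.0))  # []
print(check_settings("alpha", strength=0.7, end_percent=1.0, cfg=3.5))
```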

7. Finally, click the "Run" button to start image generation.

We ran two inpainting tests on an image of a girl wearing red funky glasses. We wanted to remove the glasses, so we uploaded the image, masked the glasses, and used the following prompts:

First try: american beautiful adorable blue eyes

Second try: asian beautiful adorable eyes

Here are the results. They are not as polished as the official report suggests, since this is the Alpha version; the Beta model generates finer detail. For better results, describe the background, effects, and style of your image in the prompt. This helps the ControlNet model produce more realistic output.

Conclusion:

The Alimama team has released Alpha, Alpha Turbo, and Beta versions. In this emerging AI field, many startups focus only on making money and building monopolies. That makes it genuinely helpful for the community when tech giants like Alibaba train their own ControlNet models and share them with the diffusion community.